WriteAssist

Improving Writing Education Using GenAI

MIDS Capstone Project, UC Berkeley

The Mission

Reduce teacher burnout and improve student writing proficiency by equipping teachers with a classroom-aligned AI assistant that automates feedback generation and supports students with 1:1 counseling.

What Sets Us Apart?

We aim to close a gap in holistic solutions for the classroom, where the exchange between students and teachers is crucial to improving writing proficiency.

01

Existing systems are decoupled from the classroom environments in which they operate

02

Interaction with students is still a bottleneck, and feedback generation is typically not customizable

03

Existing systems often make assumptions about good writing, which can conflict with the teacher’s goals

A Novel Approach to GenAI DevOps

01

We developed an end-to-end pipeline that supports transparent experimentation across a wide range of configurations

02

We tested a range of configurations across state-of-the-art language models

03

Our live application showcases teacher and student views using our best solution

MVP Demo

GenAI Ops System

To study how our system behaves, we built a GenAIOps concept with traceability, explainability, and tracking capabilities, implemented using Dagster, MLflow, and Streamlit.
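To illustrate what step-level traceability means for a GenAI pipeline, here is a minimal, dependency-free sketch: a decorator records each step's name, inputs, output, and runtime. This is an assumption-laden stand-in, not our Dagster/MLflow code; the step names (`summarize_feedback`, `build_persona`) and their placeholder logic are hypothetical, and real steps would call an LLM.

```python
import functools
import time
from typing import Any, Callable

# Hypothetical in-memory trace log. In the system described above, Dagster and
# MLflow provide run lineage and tracking; this sketch does not reproduce them.
TRACE: list[dict[str, Any]] = []


def traced(step: Callable[..., Any]) -> Callable[..., Any]:
    """Record each pipeline step's name, inputs, output, and wall-clock time."""

    @functools.wraps(step)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        start = time.perf_counter()
        result = step(*args, **kwargs)
        TRACE.append(
            {
                "step": step.__name__,
                "inputs": {"args": args, "kwargs": kwargs},
                "output": result,
                "seconds": time.perf_counter() - start,
            }
        )
        return result

    return wrapper


@traced
def summarize_feedback(samples: list[str]) -> str:
    # Placeholder for an LLM call: keep only the first sentence of each sample.
    return " ".join(s.split(".")[0] + "." for s in samples)


@traced
def build_persona(summary: str, profile: str) -> str:
    # Placeholder for an LLM-generated teacher persona artifact.
    return f"Profile: {profile}\nFeedback style: {summary}"


persona = build_persona(
    summarize_feedback(["Strong thesis. Needs evidence.", "Good flow. Fix commas."]),
    profile="9th-grade English, emphasizes argument structure",
)
for record in TRACE:
    print(record["step"], round(record["seconds"], 4))
```

Because every intermediate output is kept alongside its inputs, a reviewer can inspect exactly what each step contributed to the final artifact, which is the property the traceability layer is meant to provide.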

Inference System

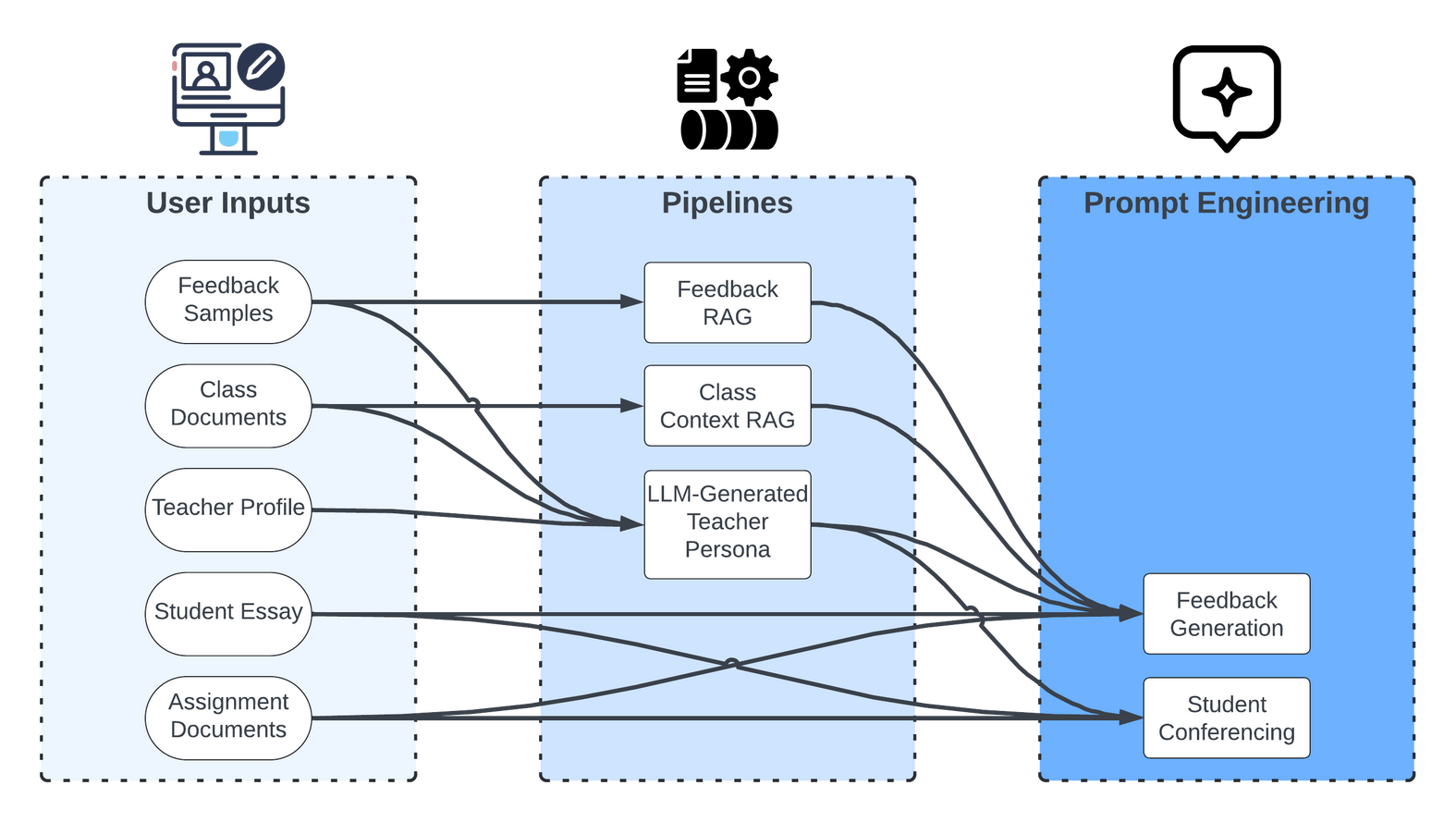

Our inference system starts with five user inputs: previous feedback samples from the teacher, class documents such as the syllabus and curriculum, a teacher profile containing the teacher's onboarding responses, the student essay being graded, and the documents associated with the essay assignment.
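One way to make these five inputs explicit in code is a single container type. The sketch below is hypothetical (the class and field names are ours for illustration, not the project's schema) and adds a small check for inputs a teacher has not yet supplied.

```python
from dataclasses import dataclass, field


@dataclass
class InferenceInputs:
    """The five user inputs listed above; field names are hypothetical."""

    feedback_samples: list[str]       # previous feedback written by the teacher
    class_documents: list[str]        # syllabus, curriculum, and similar
    teacher_profile: dict[str, str]   # onboarding responses
    essay: str                        # the student essay being graded
    assignment_documents: list[str] = field(default_factory=list)  # essay assignment materials

    def missing(self) -> list[str]:
        """Return the names of inputs that are empty."""
        return [name for name, value in vars(self).items() if not value]


inputs = InferenceInputs(
    feedback_samples=["Cite your sources."],
    class_documents=["Unit 2 syllabus"],
    teacher_profile={"grade": "9"},
    essay="Technology shapes how we write.",
)
print(inputs.missing())  # ['assignment_documents']
```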

A RAG pipeline over the teacher's feedback examples and a RAG pipeline over the class documents feed, together with the class documents and the teacher profile, into a pipeline that creates an LLM-generated teacher persona artifact.

The artifacts from these pipelines are injected into the prompt layer of the feedback generation and student conferencing features, along with the essay and its assignment context. Our prompt engineering instructs the LLM to use this context to personalize its response to the teacher and class.
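The retrieval-and-injection step can be sketched as follows, assuming simple word-window chunking and bag-of-words cosine similarity in place of the embedding model and vector store a production RAG pipeline would use. The template wording, function names, and chunk sizes are all hypothetical, not our actual prompts.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into overlapping word windows (one possible chunking strategy)."""
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def vectorize(text: str) -> Counter:
    # Bag-of-words stand-in for an embedding model.
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    chunks = [c for doc in documents for c in chunk(doc)]
    q = vectorize(query)
    return sorted(chunks, key=lambda c: cosine(q, vectorize(c)), reverse=True)[:k]


PROMPT_TEMPLATE = """You are assisting the teacher described below.
Teacher persona:
{persona}

Relevant class materials:
{class_context}

Examples of this teacher's past feedback:
{feedback_examples}

Assignment:
{assignment}

Student essay:
{essay}

Write feedback in this teacher's voice, aligned with the class materials."""


def build_feedback_prompt(persona: str, essay: str, assignment: str,
                          class_docs: list[str], feedback_samples: list[str]) -> str:
    # Inject the persona artifact and retrieved context alongside the essay.
    return PROMPT_TEMPLATE.format(
        persona=persona,
        class_context="\n".join(retrieve(essay, class_docs)),
        feedback_examples="\n".join(retrieve(essay, feedback_samples)),
        assignment=assignment,
        essay=essay,
    )


class_docs = ["Unit 3 covers thesis statements and counterarguments.", "Unit 5 covers poetry and imagery."]
prompt = build_feedback_prompt(
    persona="Values clear thesis statements.",
    essay="My thesis is that homework should be optional.",
    assignment="Argumentative essay on school policy.",
    class_docs=class_docs,
    feedback_samples=["Your thesis is clear but needs evidence."],
)
print(prompt)
```

The same persona string and retrieved chunks could be passed to a second template for student conferencing, which is how one set of artifacts can serve both features.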

Ablations

30+ hypotheses tested across enhancements, LLMs, chunking strategies, parameters, and prompt engineering.

Our developer-facing solution enables data scientists to administer experimental treatments to an end-to-end GenAI system.
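A minimal sketch of how experimental treatments might be enumerated, assuming each treatment is one full system configuration. The factor names and values below are placeholders, not the models or settings we actually tested. It shows both a full factorial grid and an ablation-style grid that varies one factor at a time from a baseline.

```python
import itertools
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Treatment:
    llm: str
    chunk_size: int
    temperature: float
    prompt_variant: str


# Hypothetical search space; model names and values are placeholders.
SEARCH_SPACE = {
    "llm": ["model-a", "model-b"],
    "chunk_size": [256, 512],
    "temperature": [0.0, 0.7],
    "prompt_variant": ["baseline", "persona"],
}


def full_factorial(space: dict[str, list]) -> list[Treatment]:
    """Every combination of factor levels."""
    keys = list(space)
    return [Treatment(**dict(zip(keys, values))) for values in itertools.product(*space.values())]


def one_at_a_time(space: dict[str, list], baseline: Treatment) -> list[Treatment]:
    """Ablation-style grid: the baseline plus single-factor deviations from it."""
    treatments = [baseline]
    for key, values in space.items():
        for value in values:
            if value != getattr(baseline, key):
                treatments.append(Treatment(**{**asdict(baseline), key: value}))
    return treatments


baseline = Treatment(llm="model-a", chunk_size=256, temperature=0.0, prompt_variant="baseline")
grid = full_factorial(SEARCH_SPACE)
ablations = one_at_a_time(SEARCH_SPACE, baseline)
print(len(grid), len(ablations))  # 16 5
```

The one-at-a-time design grows linearly with the number of factor levels, whereas the full grid grows multiplicatively, which matters when every run incurs LLM API costs.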

We track every pipeline run configuration in MLflow along with its quantitative results. The tracking system logs important artifacts that we can explore in a separate UI app to understand cost drivers, add explainability to the LLMs and RAG pipelines, and assess performance qualitatively.
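In MLflow, this kind of logging is typically done with calls such as `mlflow.log_params`, `mlflow.log_metrics`, and `mlflow.log_text` inside `mlflow.start_run()`. To keep the example dependency-free, the sketch below is a hypothetical local stand-in that stores each run's parameters, metrics, and text artifacts in its own directory. The per-token prices passed to `estimate_cost` are placeholder inputs, not real provider rates.

```python
import json
import tempfile
import uuid
from pathlib import Path


class LocalTracker:
    """Hypothetical stand-in for an experiment tracker: one directory per run."""

    def __init__(self, root: Path):
        self.root = root

    def log_run(self, params: dict, metrics: dict, artifacts: dict[str, str]) -> Path:
        run_dir = self.root / uuid.uuid4().hex
        (run_dir / "artifacts").mkdir(parents=True)
        (run_dir / "params.json").write_text(json.dumps(params, indent=2))
        (run_dir / "metrics.json").write_text(json.dumps(metrics, indent=2))
        for name, text in artifacts.items():
            (run_dir / "artifacts" / name).write_text(text)
        return run_dir

    def runs(self) -> list[dict]:
        """Load every run's params and metrics for side-by-side comparison."""
        return [
            {
                "params": json.loads((d / "params.json").read_text()),
                "metrics": json.loads((d / "metrics.json").read_text()),
            }
            for d in sorted(self.root.iterdir())
        ]


def estimate_cost(prompt_tokens: int, completion_tokens: int,
                  usd_per_1k_prompt: float, usd_per_1k_completion: float) -> float:
    # Prices are caller-supplied assumptions; substitute your provider's pricing.
    return prompt_tokens / 1000 * usd_per_1k_prompt + completion_tokens / 1000 * usd_per_1k_completion


tracker = LocalTracker(Path(tempfile.mkdtemp()))
run_dir = tracker.log_run(
    params={"llm": "model-a", "chunk_size": 512, "prompt_variant": "persona"},
    metrics={
        "prompt_tokens": 3000,
        "completion_tokens": 500,
        "cost_usd": estimate_cost(3000, 500, usd_per_1k_prompt=0.01, usd_per_1k_completion=0.03),
    },
    artifacts={"prompt.txt": "prompt text here", "retrieved_chunks.txt": "retrieved chunks here"},
)
print(tracker.runs())
```

Logging token counts next to each configuration is what makes cost drivers visible: comparing runs shows which factors (for example chunk size or prompt variant) inflate prompt length.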

System components: Dagster pipelines, tracking system, artifact store, and evaluation app.

Experimentation Demo

Three Innovations

01

Developed a GenAI concept to tailor feedback generation to each teacher in a scalable, low-cost fashion

02

Leveraged the same artifacts produced by the feedback generation system to customize 1:1 student conferencing to the teacher and class

03

Combined machine learning theory, MLOps, and GenAI to construct a GenAIOps concept that allowed us to optimize over all components of our system in a scalable, transparent, and cost-efficient way

Our Capstone Team

Richard Mathews II

Project Manager / Data Scientist

Emily McPherson

App Developer

Daphne Lin

Data Scientist

Sabreena Naser

Data Scientist

Patrick Xu

Data Scientist

Contact

richard.mathews@ischool.berkeley.edu

emcpherson@ischool.berkeley.edu

daphnelin@ischool.berkeley.edu

snaser1@ischool.berkeley.edu

patrick.xu@ischool.berkeley.edu

We would like to thank our advisers, Joyce Shen and Kira Wetzel, for their insightful feedback and support throughout the process.

Copyright © 2024 R. Mathews, E. McPherson, D. Lin, S. Naser, P. Xu